deep learning - Wasserstein GAN implemtation in pytorch. How to implement the loss? - Stack Overflow

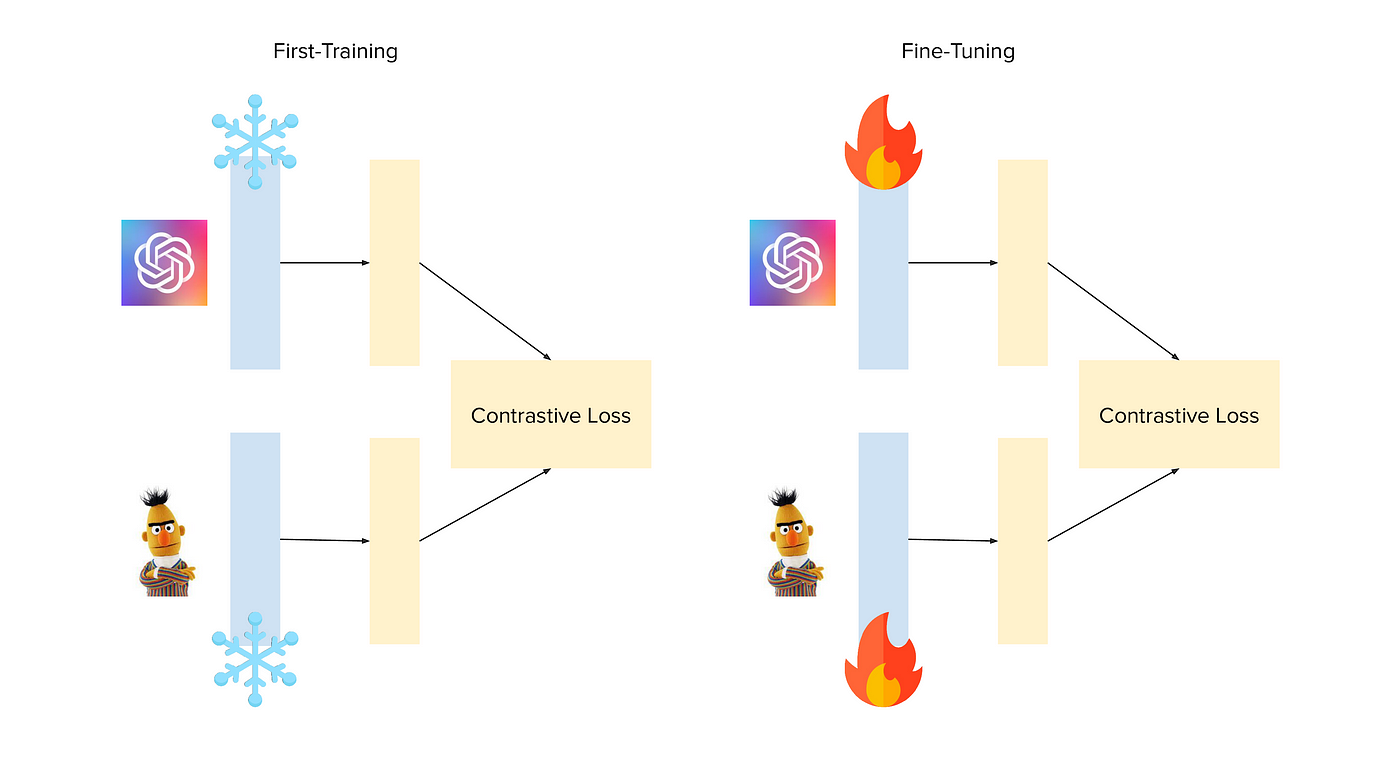

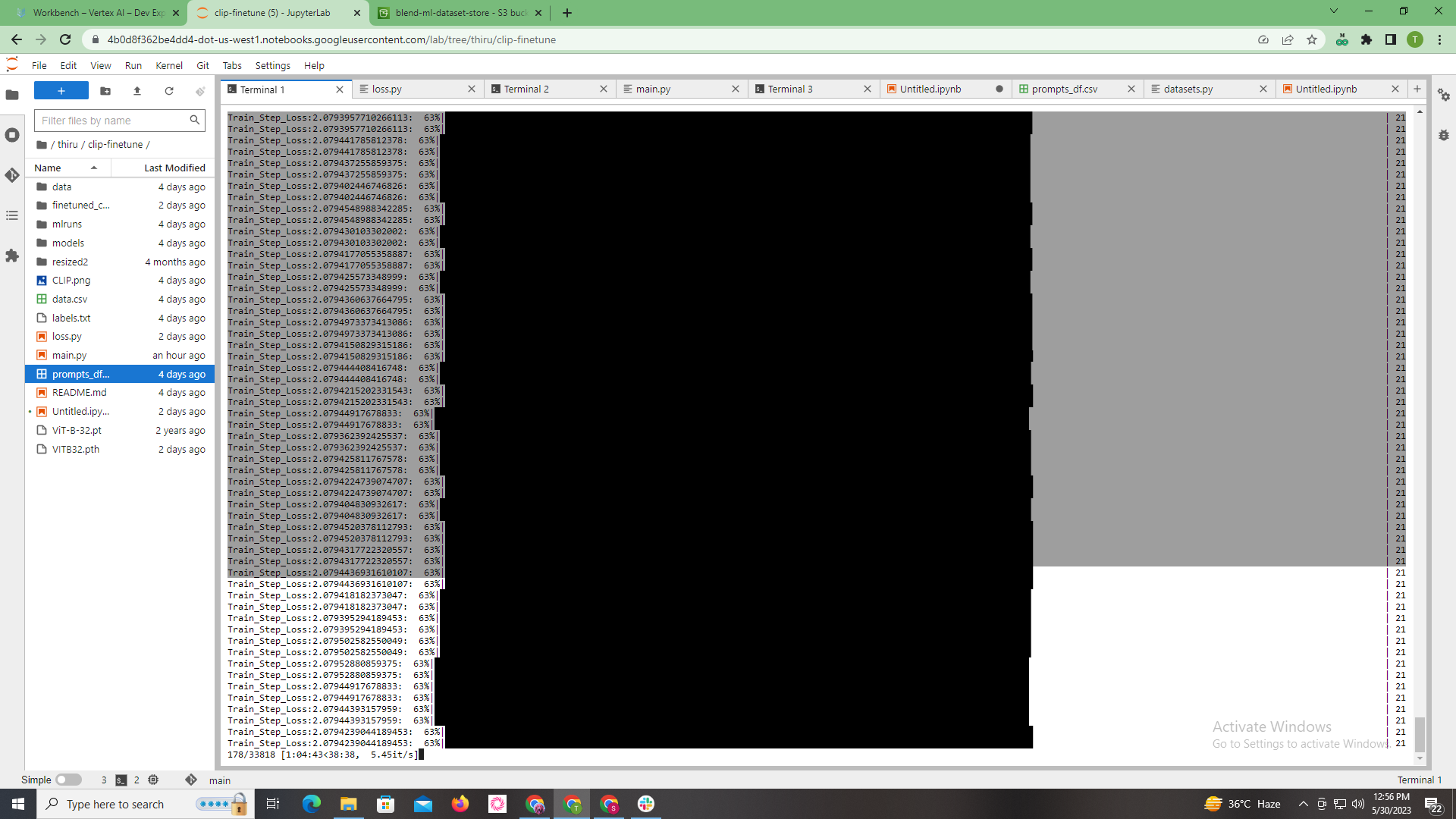

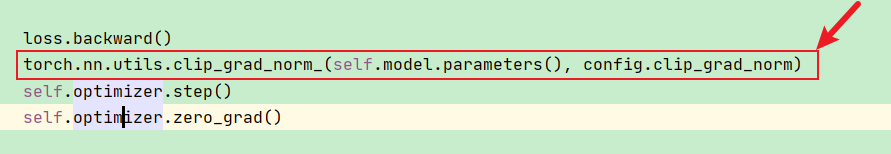

Aman Arora on X: "Excited to present part-2 of Annotated CLIP (the only 2 resources that you will need to understand CLIP completely with PyTorch code implementation). https://t.co/L0RHsvixcd As part of this

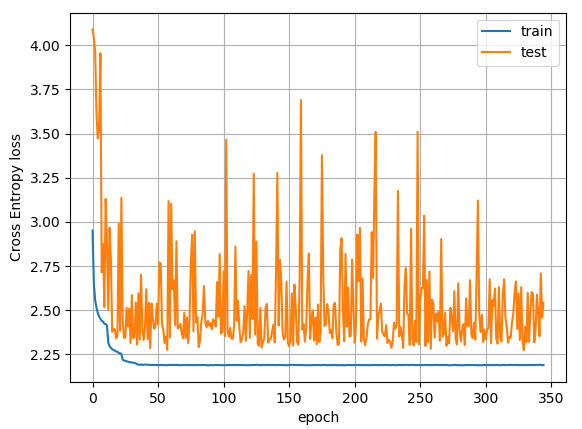

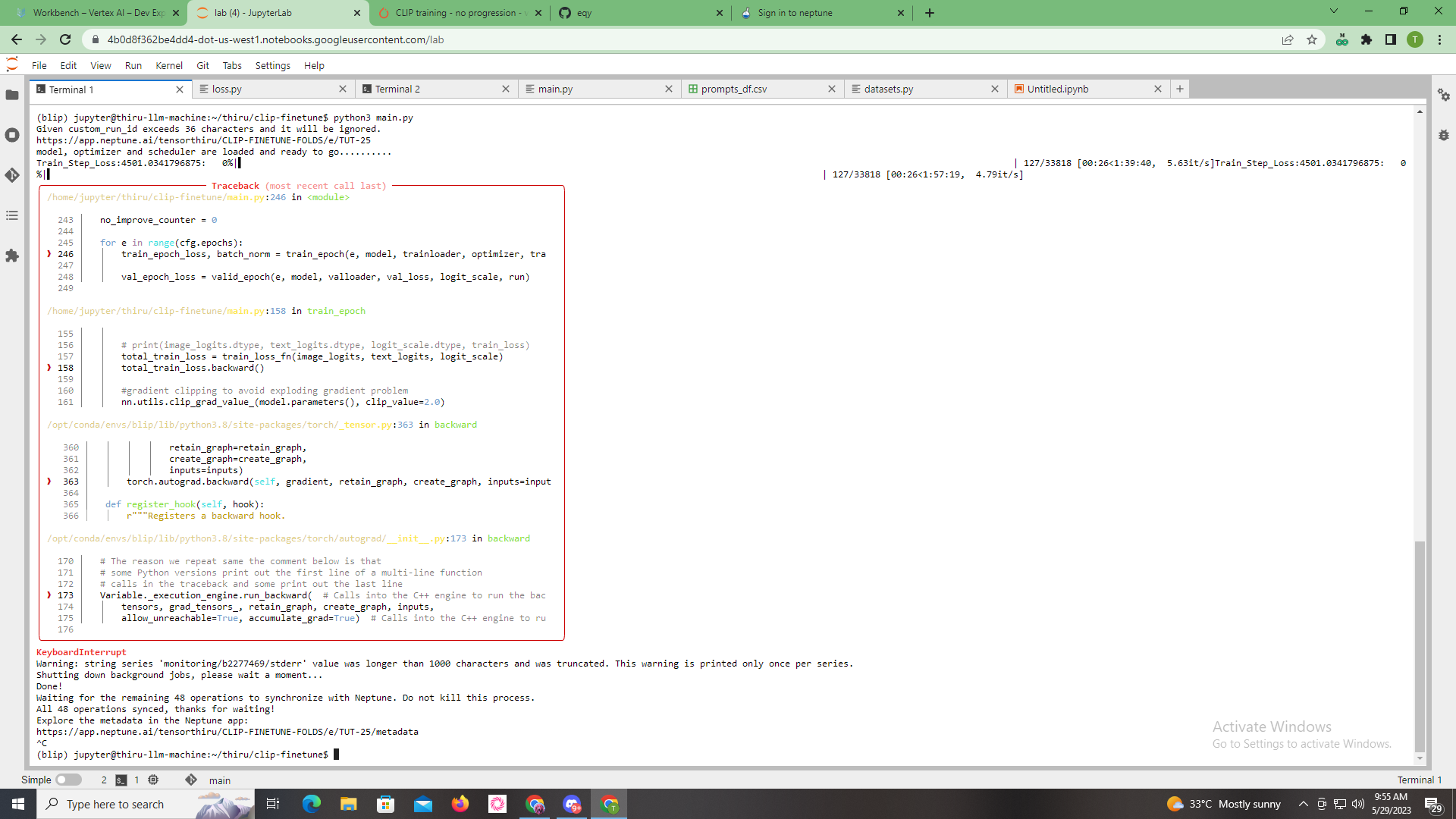

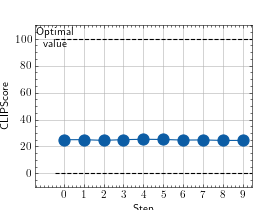

The training loss(logging steps) will drop suddenly after each epoch? Help me plz! Orz - 🤗Transformers - Hugging Face Forums

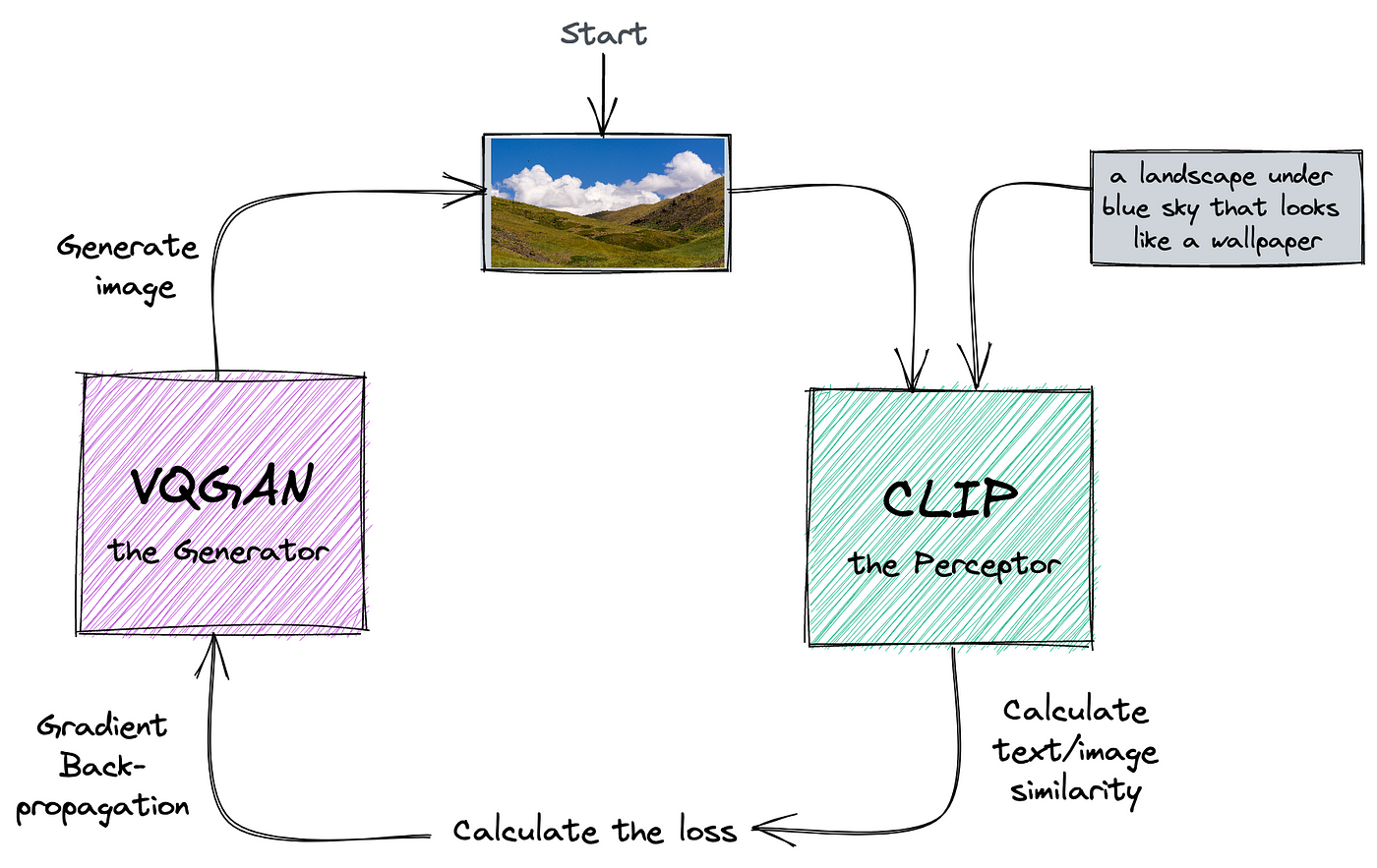

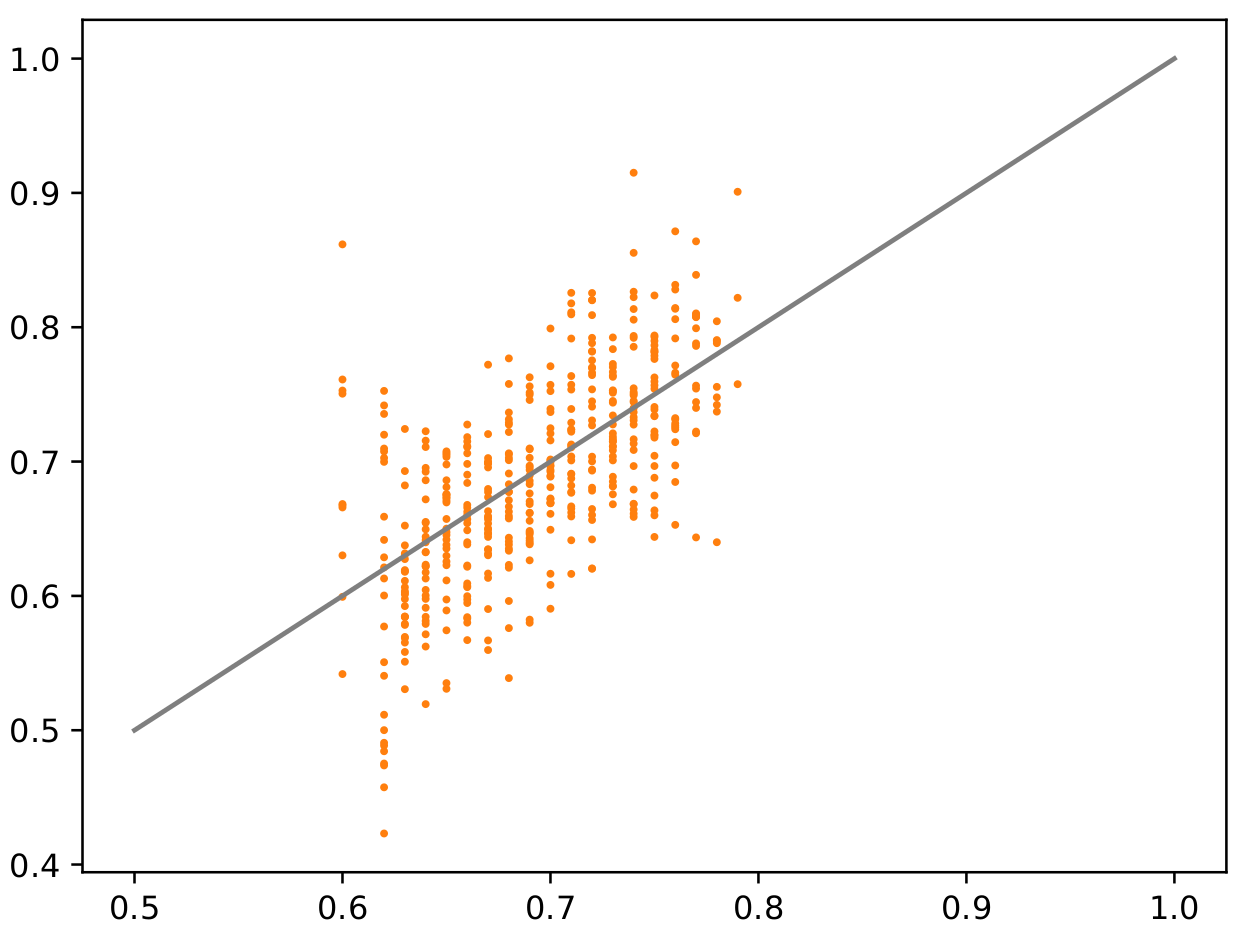

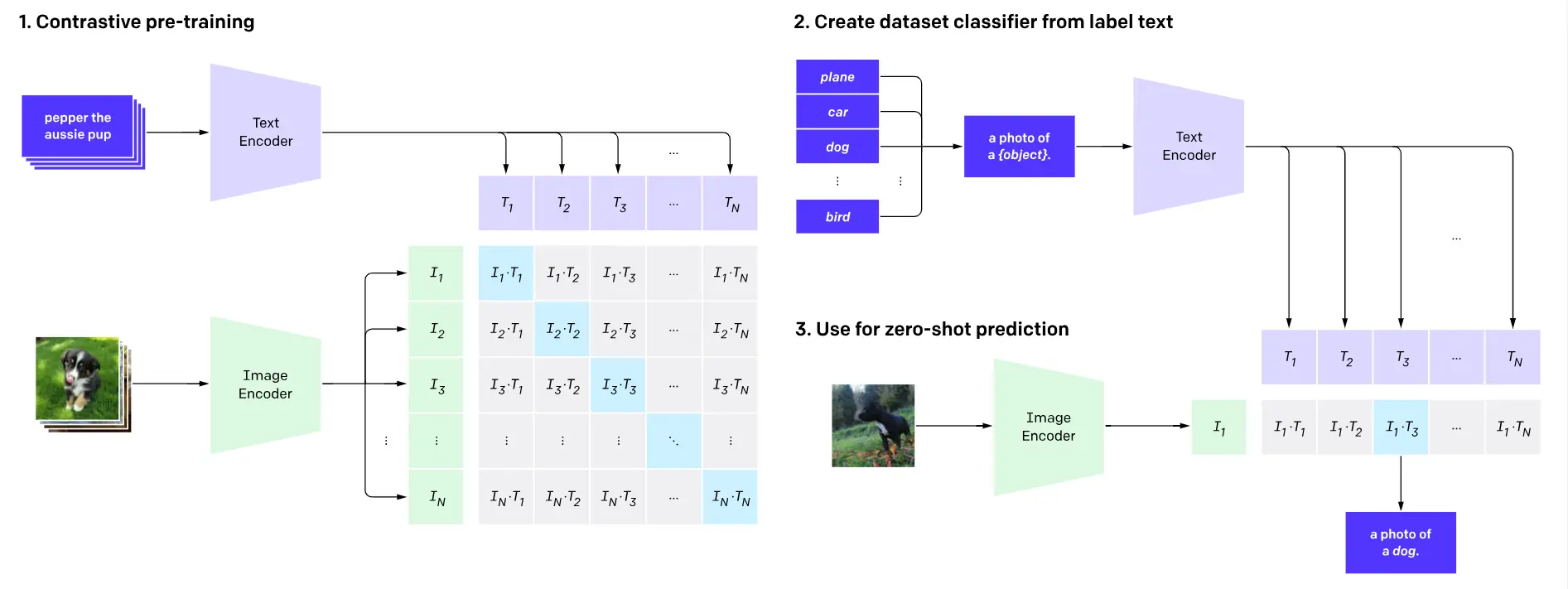

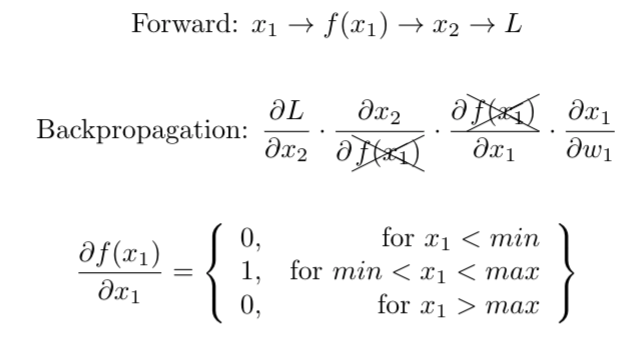

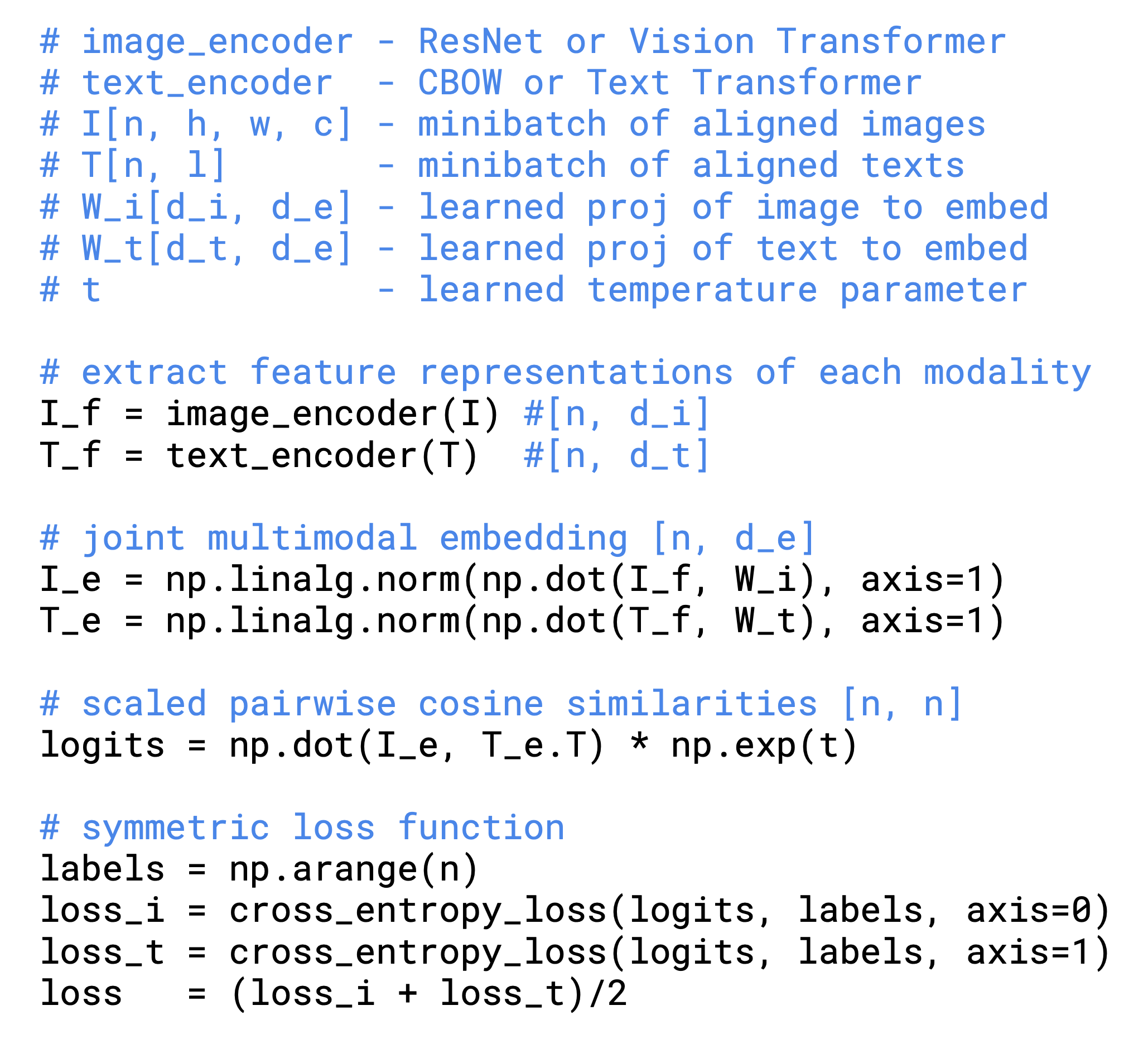

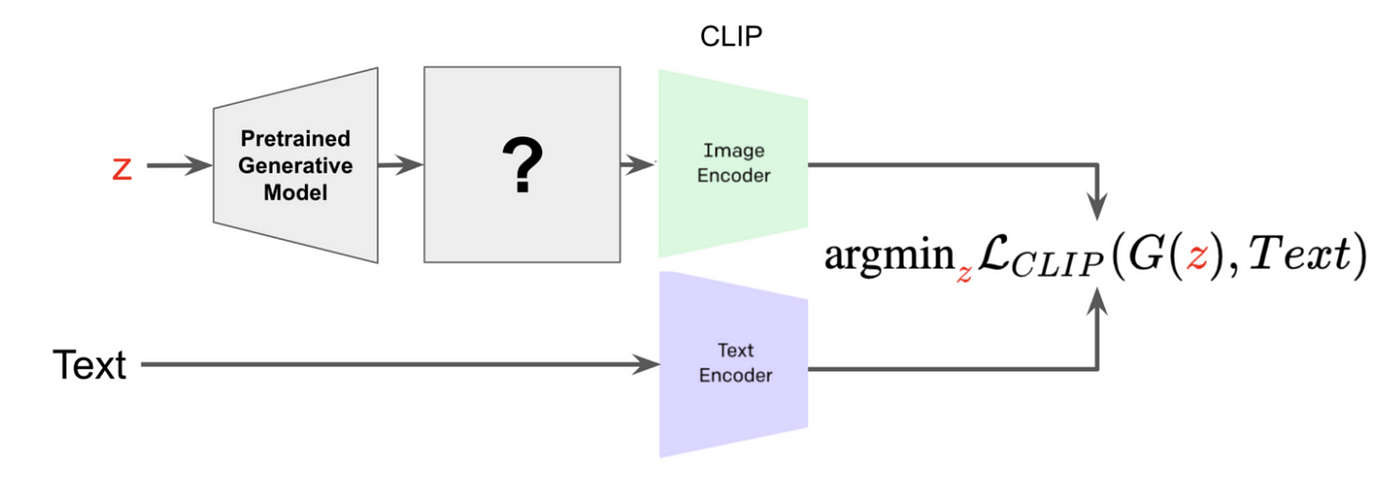

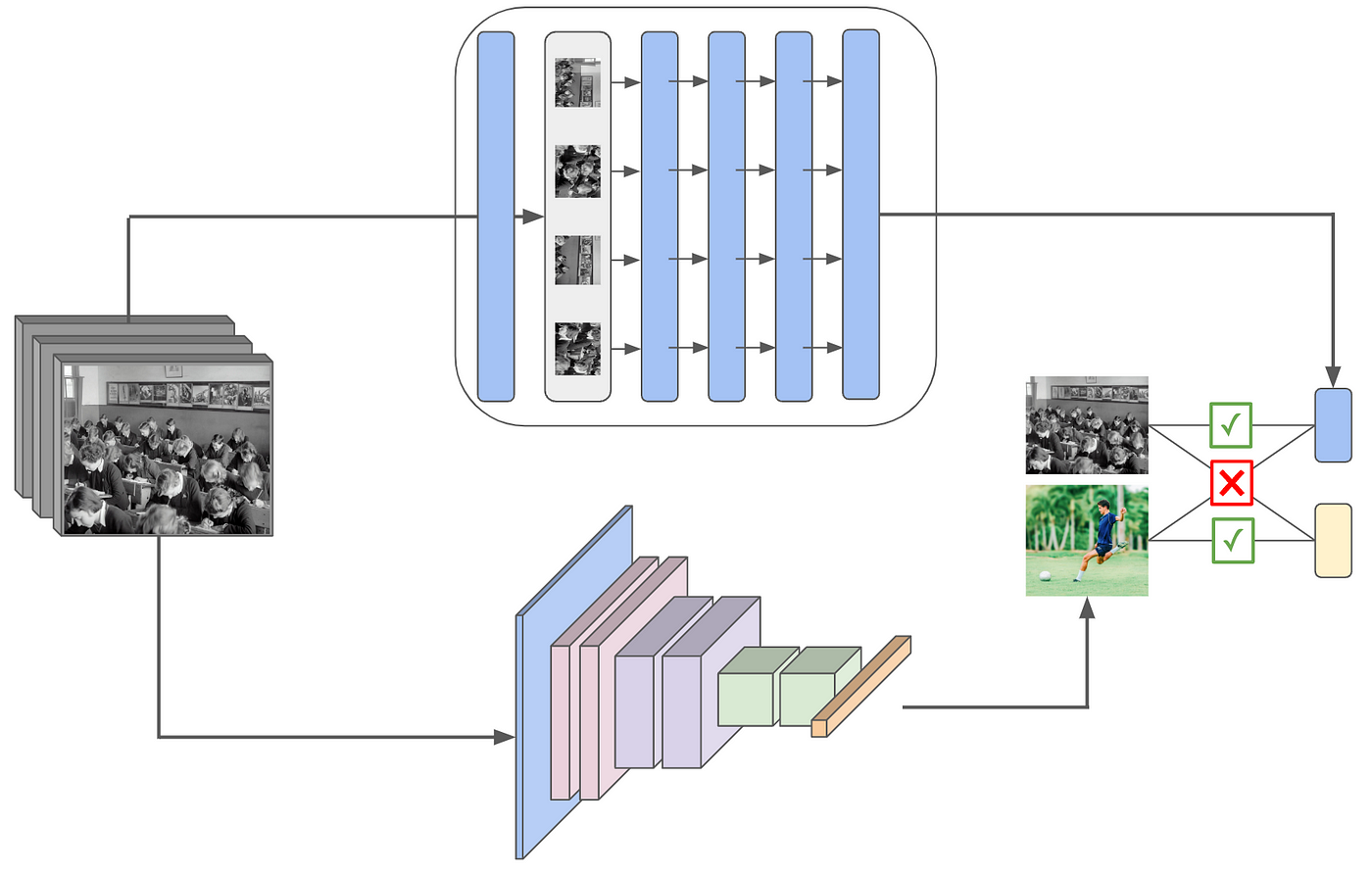

Contrastive Language–Image Pre-training (CLIP)-Connecting Text to Image | by Sthanikam Santhosh | Medium

Using CLIP to Classify Images without any Labels | by Cameron R. Wolfe, Ph.D. | Towards Data Science